A reliable review ritual turns scattered effort into steady progress. “Weekly and monthly reviews” are short, structured sessions where you look back at what happened, measure movement on goals, and deliberately reset your plan for the next cycle. In practice, a weekly review tightens your execution, while a monthly review re-examines direction. Do both, and you catch drift early, allocate time intelligently, and make better trade-offs. Below you’ll find 12 concrete steps—each actionable alone, powerful together—to help you reflect on goals and adjust plans with clarity.

Quick recap of the 12 steps

- Set cadence & scope

- Close open loops

- Reconnect tasks to goals/OKRs

- Inspect metrics

- Audit calendar & time blocks

- Re-prioritize with a matrix

- Capacity & commitment check

- Risks & dependencies

- Monthly retrospective

- Budget & resources

- System reset & archiving

- Experiments & habit tweaks

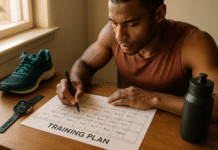

1. Lock Your Cadence and Scope (Weekly = Execution; Monthly = Direction)

Decide exactly when you’ll review and what each session covers; this clarity is the backbone of consistency. The weekly review keeps work flowing—think execution hygiene, next actions, and short-term priorities—while the monthly review steps back to validate goals, assumptions, and strategy. Put both on your calendar as repeating events, and treat them like immovable meetings with your future self. As a guardrail, schedule 45–60 minutes for the weekly review and 90–120 minutes for the monthly. This split respects cognitive load: short to maintain momentum, longer to rethink direction. By committing to fixed times and scopes, you prevent reviews from slipping into either endless rumination or rushed box-ticking, and you create a dependable rhythm for continuous improvement.

1.1 How to do it

- Create two recurring calendar events: “Weekly Review” (e.g., Fridays 4:00–5:00 PM) and “Monthly Review” (e.g., the last Friday of the month, 90–120 minutes).

- Define a one-line purpose for each invite (weekly = “close loops + plan next week”; monthly = “measure goals + adjust plans”).

- Add prep reminders 2–4 hours earlier to gather inputs.

- Pre-attach your checklist and templates to each calendar event.

- Protect the time—decline or reschedule conflicts proactively, not reactively.
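To sanity-check the recurring dates before you create the events, the example cadence above can be sketched in a few lines of Python. This is a minimal illustration, not a calendar integration: the function names `last_friday` and `weekly_fridays` are hypothetical, and the sketch assumes Friday reviews with the monthly session on the last Friday of the month.

```python
import calendar
from datetime import date, timedelta


def last_friday(year: int, month: int) -> date:
    """Return the last Friday of the given month (assumed monthly review day)."""
    last_day = date(year, month, calendar.monthrange(year, month)[1])
    # weekday(): Monday=0 ... Friday=4; step back to the most recent Friday.
    offset = (last_day.weekday() - calendar.FRIDAY) % 7
    return last_day - timedelta(days=offset)


def weekly_fridays(year: int, month: int) -> list[date]:
    """Return every Friday in the month (assumed weekly review days)."""
    fridays = []
    day = last_friday(year, month)
    while day.month == month:
        fridays.append(day)
        day -= timedelta(days=7)
    return sorted(fridays)


if __name__ == "__main__":
    print("Monthly review:", last_friday(2025, 3))
    print("Weekly reviews:", weekly_fridays(2025, 3))
```

Under this example cadence the monthly review falls on the same day as that week's weekly review, so decide up front how the two sessions share the afternoon.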

1.2 Numbers & guardrails

- Weekly: 45–60 minutes; aim for 80% completion of your checklist.

- Monthly: 90–120 minutes; reserve the first 30 minutes for data capture before discussion/decisions.

- Slip tolerance: allow at most a 24-hour shift; beyond that, run a shorter “catch-up” version and reset.

Cadence beats intensity: a shorter review done every time compounds faster than a perfect review done occasionally.

2. Close Open Loops: Capture, Clarify, and Park

Start by getting everything out of your head and into a trusted system; this immediately reduces stress and improves focus. “Open loops” are incomplete commitments—emails to answer, messages to send, tasks to finish, ideas to explore—that steal attention. In a weekly review, sweep inboxes (email, chat, paper notes, DMs), capture loose tasks, and clarify next actions. If something takes less than two minutes, do it; otherwise, park it in the right list with a verb-first next step. This step is not about doing the work; it’s about making trustworthy decisions so your next week begins with a clean slate. When you close loops consistently, the rest of the review runs faster and planning becomes realistic.

2.1 Mini-checklist

- Collect: Email, chat, physical notes, downloads folder, calendar invites, voicemail.

- Clarify: Is it actionable? If yes, define the very next physical action; if no, archive or incubate.

- Organize: Put actions into projects/contexts; date-specific items onto the calendar.

- Defer/Delegate: Assign owners and due dates; track handoffs in a “Waiting For” list.

- Zero the inputs: Aim for inbox zero or a clearly labeled “Review Later” bucket.

2.2 Common mistakes

- Doing, not deciding—burning half your review actually completing tasks.

- Capturing vague items like “marketing”—instead, write “Draft 3 ad headlines for Q3 promo.”

- Letting “someday” grow without pruning; review it monthly and cull aggressively.

A reliable capture habit is a force multiplier: your brain trusts the system, so it lets go and focuses better.

3. Reconnect Your Tasks to Goals and OKRs

Make sure your daily tasks ladder up to clear goals; otherwise, productivity becomes motion without meaning. During the weekly review, reconcile each active project with the goals or OKRs it supports and identify 1–3 high-leverage next actions. In the monthly review, check whether your goals still fit reality: are the Objectives still the right ones, and are the Key Results measurable and relevant? Update or retire goals that no longer matter; promote experiments that proved value. This alignment step prevents the classic drift where busywork crowds out impact, and it guarantees that your plan for next week actually advances the outcomes you care about.

3.1 How to do it

- List each active project; write the goal/OKR it supports next to it.

- For each, pick 1–3 “moves the metric” actions for the coming week.

- For monthly: compare actuals vs Key Results; mark each KR as on track / at risk / off track.

- If a KR is off track, rewrite the leading indicator or change the approach; don’t just “try harder.”

- Archive projects that lack a current goal; resurrect only with a clear OKR tie-in.
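The on track / at risk / off track call in the monthly step can be made repeatable with a small helper. The sketch below is one possible rule, not a standard: it compares actual progress with a straight-line pace toward the target, and the cutoffs (90% and 70% of expected pace) and the name `kr_status` are assumptions you should tune to your own goals.

```python
def kr_status(
    actual: float,
    target: float,
    elapsed_fraction: float,
    on_track_ratio: float = 0.9,   # assumed cutoff, not from any OKR standard
    at_risk_ratio: float = 0.7,    # assumed cutoff, not from any OKR standard
) -> str:
    """Classify a Key Result against a straight-line pace toward its target."""
    if target <= 0:
        raise ValueError("target must be positive")
    if not 0 < elapsed_fraction <= 1:
        raise ValueError("elapsed_fraction must be in (0, 1]")

    expected = target * elapsed_fraction  # where linear progress would be today
    ratio = actual / expected
    if ratio >= on_track_ratio:
        return "on track"
    if ratio >= at_risk_ratio:
        return "at risk"
    return "off track"


if __name__ == "__main__":
    # Halfway through the period, 40 of a targeted 100 sign-ups.
    print(kr_status(actual=40, target=100, elapsed_fraction=0.5))
```

A linear pace is a simplification; Key Results that ramp up late in the period will look worse than they are under this rule.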

3.2 Numbers & guardrails

- Weekly: no project should have more than 3 focus actions; excess signals lack of prioritization.

- Monthly: cap yourself at 3–5 active goals; dispersion kills momentum.

The aim: fewer, clearer goals, paired with crisp next actions, beat sprawling plans every time.

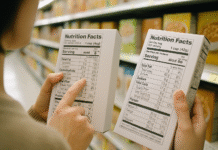

4. Inspect Metrics: Leading and Lagging Indicators

Use numbers to inform judgment, not replace it. In your weekly review, examine leading indicators (inputs you control in the short term), like deep-work hours, outreach sent, or prototype iterations; in the monthly review, pair those with lagging indicators (outcomes), like revenue, customer retention, grades, or personal records (PRs). Track both in a lightweight dashboard so you can differentiate “we did the right things” from “we got the right results.” When leading indicators are strong but lagging results are weak, you may need a longer time horizon; when both are weak, your plan needs surgery. The goal is a calm, evidence-based conversation about progress.

4.1 Tools & examples

- Personal: Track deep-work hours, sleep (7–9 hours target), and sessions completed.

- Team: Track cycle time, on-time delivery %, and NPS or CSAT.

- Example: If you target 10 outreach emails/week and hit 12, but monthly demos remain flat, revisit your targeting or follow-up scripts.

4.2 Numbers & guardrails

- Aim for 3–7 metrics total; beyond that, signal gets drowned in noise.

- Define a weekly “green range” for each leading metric (e.g., 12–15 deep-work hours).

- Review trends, not just snapshots—3- and 12-week rolling averages tell the real story.

Good metrics are decision tools: they prompt the right questions and shape your next move.

5. Audit Your Calendar and Reset Time Blocks

Your calendar is your actual strategy—inspect it like one. Each week, look back at your calendar and categorize hours: deep work, shallow work, meetings, admin, personal. Compare what you planned to what happened; the gap reveals your execution reality. Then reset your time blocks for the next week, placing the most important work when your energy peaks. Monthly, rethink the default template: Do you need a meeting-lite day? A writing morning twice a week? Remove ritualized meetings that no longer earn their place. This habit ensures you spend time on the work that actually moves goals.

5.1 How to do it

- Export last week’s calendar; color-tag categories.

- Compute rough percentages (e.g., deep work 32%, meetings 38%, admin 20%, personal 10%).

- Identify one “thief” block to eliminate and one “maker” block to protect.

- Schedule next week by blocking priority work first, then support work, then meetings.

- Add 15–20% buffer time; life happens.
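If your calendar export gives you a category and a duration per event, the rough percentages can be computed rather than eyeballed. This is a sketch under that assumption: the `(category, hours)` tuple format and the name `category_shares` are illustrative, not the output of any particular calendar tool.

```python
from collections import defaultdict


def category_shares(events: list[tuple[str, float]]) -> dict[str, int]:
    """Return each category's share of total hours as a rounded percentage."""
    totals: dict[str, float] = defaultdict(float)
    for category, hours in events:
        totals[category] += hours

    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {c: round(100 * h / grand_total) for c, h in totals.items()}


if __name__ == "__main__":
    # Hypothetical 50-hour week, already tagged by category.
    last_week = [
        ("deep work", 10), ("deep work", 6),
        ("meetings", 19),
        ("admin", 10),
        ("personal", 5),
    ]
    print(category_shares(last_week))
```

Because each share is rounded independently, the percentages may not always sum to exactly 100; that is fine for a rough audit.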

5.2 Numbers & guardrails

- Aim for 12–20 deep-work hours/week (students may flex higher around exams).

- Cap meetings at 30–40% of working hours unless your role is primarily managerial.

- Maintain at least one 90–120 minute uninterrupted block daily.

The rule of thumb: if it’s not blocked, it’s wishful thinking; if it’s blocked and protected, it’s progress.

6. Re-prioritize with a Simple Matrix (Urgent/Important)

Before you finalize next week’s plan, re-rank work using a lightweight matrix. Map tasks into four boxes—Important & Urgent, Important & Not Urgent, Not Important & Urgent, Not Important & Not Urgent—and let that guide your scheduling and delegation. Weekly, commit to shipping items in Important & Urgent and protect time for Important & Not Urgent (the compounding quadrant). Quickly delegate or batch the Urgent/Not Important items and delete or archive the rest. Monthly, review whether your system is producing enough Not-Urgent/Important work; if not, you’re living in reaction mode. This matrix makes trade-offs visible and keeps the next week from becoming a fire drill.

6.1 Mini-checklist

- Identify: Tag each task as Important or not, and Urgent or not.

- Act: Schedule Important & Urgent first, then Important & Not Urgent.

- Decide: Delegate Urgent & Not Important; delete Not Important & Not Urgent.

- Validate: Ask, “If I skipped this for 30 days, what breaks?”

- Review: Move any orphan tasks back into projects or archive.
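The checklist above amounts to a two-flag lookup plus a sort, which can be sketched as follows. The action labels paraphrase the checklist, and the `Task` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    important: bool
    urgent: bool


def quadrant_action(task: Task) -> str:
    """Map a task's two flags to the action the matrix suggests."""
    if task.important and task.urgent:
        return "schedule first"
    if task.important:
        return "schedule next and protect the time"
    if task.urgent:
        return "delegate or batch"
    return "delete or archive"


def plan_order(tasks: list[Task]) -> list[Task]:
    """Sort tasks: important before unimportant, then urgent before not urgent."""
    # False sorts before True, so negate the flags; sorted() is stable.
    return sorted(tasks, key=lambda t: (not t.important, not t.urgent))


if __name__ == "__main__":
    tasks = [
        Task("Reply to vendor ping", important=False, urgent=True),
        Task("Draft strategy memo", important=True, urgent=False),
        Task("Fix production bug", important=True, urgent=True),
        Task("Reorganize old bookmarks", important=False, urgent=False),
    ]
    for t in plan_order(tasks):
        print(f"{t.name}: {quadrant_action(t)}")
```

The flags themselves remain a judgment call; the code only keeps the resulting order and actions consistent from week to week.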

6.2 Common mistakes

- Putting everything in “important” to avoid saying no.

- Treating the matrix as a one-time exercise; it should inform weekly blocking.

- Confusing others’ urgency with your importance; align to your goals first.

Smart prioritization isn’t about doing more; it’s about protecting what matters.

7. Check Capacity and Set Commitments You Can Keep

Plan to your true capacity, not your idealized self. Start with available working hours for the coming week, subtract fixed commitments (meetings, classes), set aside a buffer (15–20%), and only then fill the remaining slots with tasks. Weekly, this prevents overbooking; monthly, it exposes structural issues, such as too many projects or insufficient staffing, that require negotiation or redesign. Use simple Work-in-Progress (WIP) limits to cap concurrent tasks and projects. When you right-size commitments, you preserve quality, hit deadlines, and reduce stress.

7.1 Numbers & guardrails

- Buffer: 15–20% of weekly working hours.

- WIP: No more than 3 active projects at once for a solo worker; 5–7 for teams with shared capacity.

- Task sizing: Aim for tasks you can complete in 25–120 minutes; split anything larger.

7.2 How to do it

- Calculate: Available hours − (meetings + admin) − buffer = capacity.

- Load: Fill capacity with time-blocked tasks from your prioritized list.

- Negotiate: If demand > capacity, escalate trade-offs; don’t silently overcommit.

- Track: Use a kanban board with explicit WIP limits to visualize flow.
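The capacity formula above is simple enough to script, which makes the weekly check quick and hard to fudge. In this sketch the buffer is taken as a percentage of total available hours (one reading of "15–20% of weekly working hours"); the function names and sample numbers are illustrative assumptions.

```python
def weekly_capacity(
    available_hours: float,
    meeting_hours: float,
    admin_hours: float,
    buffer_rate: float = 0.15,  # assumed: buffer is a share of available hours
) -> float:
    """Capacity = available hours - (meetings + admin) - buffer, floored at 0."""
    if not 0 <= buffer_rate < 1:
        raise ValueError("buffer_rate must be in [0, 1)")
    buffer = available_hours * buffer_rate
    return max(0.0, available_hours - (meeting_hours + admin_hours) - buffer)


def overcommitted_by(task_hours: list[float], capacity: float) -> float:
    """Hours of planned work beyond capacity (0 if the plan fits)."""
    return max(0.0, sum(task_hours) - capacity)


if __name__ == "__main__":
    capacity = weekly_capacity(available_hours=40, meeting_hours=12, admin_hours=4)
    planned = [2, 1.5, 3, 2, 4, 2, 1.5, 3]  # hypothetical time-blocked tasks
    print(f"Capacity: {capacity:.1f} h")
    print(f"Over by:  {overcommitted_by(planned, capacity):.1f} h")
```

With 40 available hours, 12 hours of meetings, 4 of admin, and a 15% buffer, about 18 hours remain for planned tasks. A non-zero "over by" figure is the cue to negotiate trade-offs rather than absorb the overload.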

The principle: under-committing and over-delivering beats the reverse, and honest capacity planning makes it possible.

8. Surface Risks, Dependencies, and Blockers

Treat uncertainty as work, not background noise. Each review, list blockers (things stopping progress), dependencies (work you’re waiting on), and risks (things that could go wrong). For blockers and dependencies, assign an owner and a deadline to unblock. For risks, write a simple “prevent/mitigate” note and decide whether to change scope or sequence. Weekly, this keeps execution smooth; monthly, it informs roadmap changes and stakeholder expectations. By making uncertainty explicit, you reduce surprises and build resilient plans.

8.1 Mini-checklist

- Blockers: Who/what is in the way? What’s the next escalation step?

- Dependencies: What are you waiting for? Can you parallelize or stub?

- Risks: Which risks have at least a 20% chance of materializing? What’s the earliest warning signal?

- Decisions: Which risks justify scope change or added buffer?

8.2 Tools & examples

- Use a “Waiting For” list and a simple RAID log (Risks, Assumptions, Issues, Dependencies).

- Example: You depend on design assets by Wednesday; if late, ship text-only v1 and hot-swap visuals later.

Forecasting beats reacting: when you tame uncertainty, schedules stop slipping in silence.

9. Run a Monthly Retrospective (Start/Stop/Continue + Hypotheses)

Once a month, step back and review the system itself. Use a simple retrospective framework—Start/Stop/Continue or the 4Ls (Liked, Learned, Lacked, Longed for)—to surface patterns, not just incidents. Convert insights into a few “try statements” (e.g., “We believe moving stand-ups to 11:00 will reduce context switching; we’ll know by a 15% drop in after-hours Slack”). Keep it blameless and specific. The output should be small experiments that change how you work, not just a list of complaints. Over time, these retros build a culture of continuous learning.

9.1 How to do it

- Invite the right voices; keep the room safe and candid.

- Timebox: 20 minutes for data/insights, 20 for themes, 20 for actions.

- Produce 1–3 experiments with clear measures and owners.

- Revisit last month’s experiments first—did they work?

9.2 Numbers & guardrails

- Cap “action items” at 3; more usually means none get done.

- Require each experiment to have a measurable hypothesis and a review date.

Commit to iteration: one small, measured improvement each month compounds into major gains.

10. Review Budgets and Resource Allocation

Your goals run on time, money, and energy, so inspect all three. Weekly, skim small expenses, time costs, and energy drains; monthly, look for patterns and reallocate. Cancel tools you don’t use, fund the ones that save hours, and ensure you’re investing in the few initiatives that actually improve your metrics. For personal goals, this might mean budgeting for tutoring or a course; for teams, it may mean shifting headcount or vendor spend. Numbers don’t decide for you, but they expose trade-offs you must make consciously.

10.1 Mini-checklist

- Money: Subscriptions, SaaS seats, freelance hours—keep/cut/upgrade.

- Time: Meetings or tasks with poor ROI—eliminate, automate, or batch.

- Energy: Identify tasks that drain disproportionately—delegate or time-box.

- Invest: Redirect freed resources to the 1–2 initiatives that move your Key Results.

10.2 Numbers & guardrails

- Trim at least 5–10% of spend or time each quarter by pruning low-value items.

- Track cost per outcome where sensible (e.g., cost per lead, hours per feature).

- Budget a small learning R&D line (e.g., 1–3% of time/money) for skill growth.

Resource decisions signal strategy: make them on purpose, not by inertia.

11. Reset Systems, Templates, and Documentation

Cluttered systems slow you down; a monthly reset keeps them lean. Archive completed projects, merge duplicate lists, and refresh templates with what you’ve learned. Check automations (rules, filters, zaps) for accuracy; stale automations create hidden errors. Update your “operating manual” (how you plan, how you run meetings, how you ship), even if it’s just a page. Weekly, do a light version: clear notifications, rename messy tasks, and tidy your kanban. This step protects future clarity and reduces friction you’d otherwise pay for all month.

11.1 Mini-checklist

- Archive: Close out finished projects and tag them for easy reference.

- Refactor: Rename lists and consolidate overlapping boards or databases.

- Template-ize: Save repeatable agendas, checklists, and email drafts.

- Automate: Fix or remove brittle rules; document the ones that remain.

- Back up: Export critical docs and dashboards monthly.

11.2 Common mistakes

- Keeping everything “just in case,” which turns systems into junk drawers.

- Letting templates stagnate; they should evolve with your reality.

- Over-automating fragile workflows; prefer a few robust rules to a maze of triggers.

Clean systems make good habits easy and bad habits obvious.

12. Plan Experiments and Habit Tweaks (Implementation Intentions & WOOP)

End each review by choosing a few small behavioral bets, then wire them into your week. Use implementation intentions (“If it’s 8:30 AM and I’ve opened my laptop, then I start the 90-minute writing block”) to make desired actions automatic. Pair them with WOOP (Wish, Outcome, Obstacle, Plan) to anticipate what will get in the way and pre-decide responses. Think of these as controlled experiments with clear success criteria. Weekly, you’ll adjust tactics; monthly, you may add or retire habits based on results. This approach transforms vague intentions into practical routines that withstand real-world friction.

12.1 How to do it

- Write 1–3 “If-Then” plans tied to cues you reliably encounter.

- Run a quick WOOP: state the wish and outcome, list the likely obstacle, write the plan.

- Track adherence (Y/N) daily; review weekly.

- Keep each experiment to 2–4 weeks; extend only if it’s working.

12.2 Numbers & guardrails

- Limit to 3 active behavior experiments at a time.

- Use a success threshold (e.g., ≥70% adherence across 2 weeks triggers “keep”).

- Retire experiments that don’t clear the threshold; try a smaller or different behavior.
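The keep-or-retire rule above can be applied mechanically to a daily Y/N log. This sketch uses the example threshold of 70% adherence; the function names and the sample log are hypothetical.

```python
def adherence_rate(log: list[bool]) -> float:
    """Share of days on which the habit was done (True = done)."""
    if not log:
        raise ValueError("log must contain at least one day")
    return sum(log) / len(log)


def decide(log: list[bool], threshold: float = 0.70) -> str:
    """Apply the example rule: at or above the threshold means keep."""
    if adherence_rate(log) >= threshold:
        return "keep"
    return "retire or try a smaller behavior"


if __name__ == "__main__":
    # Two weeks of daily Y/N tracking for one If-Then plan (made-up data).
    two_weeks = [True, True, False, True, True, False, True,
                 True, True, True, False, True, False, True]
    print(f"Adherence: {adherence_rate(two_weeks):.0%}")
    print("Decision: ", decide(two_weeks))
```

Counting every calendar day is a choice; if a habit only applies on workdays, log only those days so the rate is not diluted.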

Behavior is the bridge between goals and results; wiring tiny, specific habits keeps that bridge open.

FAQs

1) What’s the difference between a weekly and a monthly review?

A weekly review is tactical: you close loops, check leading indicators, and plan blocks for the next seven days. A monthly review is strategic: you evaluate whether goals and assumptions are still right, inspect lagging results, and decide larger adjustments. Run both to balance execution with direction, so you neither spin your wheels nor drift off course. Think of weekly as “steer the car,” monthly as “choose the route.”

2) How long should reviews take?

Aim for 45–60 minutes weekly and 90–120 minutes monthly. The weekly version focuses on hygiene (inboxes, priorities, time blocks), so it’s shorter. The monthly includes deeper analysis (metrics, retrospectives, resource shifts), warranting more time. If you consistently run over, your checklist is bloated; if you finish in 10 minutes, you’re likely skipping important reflection or data capture.

3) Which tools do I need?

You can start with a calendar, a notes app, and a simple task list. Add a kanban board for flow, a basic spreadsheet or dashboard for metrics, and a habit tracker if behavior change is central. Choose the fewest tools that make the work easier. If a tool doesn’t save time or improve clarity, cut it—your system should be helpful, not heavy.

4) How do I keep from overcommitting after the review?

Use capacity math: total working hours minus meetings and admin, minus a 15–20% buffer, equals your weekly capacity. Fill only that amount with time-blocked tasks. Apply WIP limits (no more than three active projects) to prevent task thrash. If demand exceeds capacity, escalate trade-offs instead of absorbing the overload privately.

5) What if my metrics don’t improve even though I’m doing the work?

Check lead-to-lag alignment: are your leading indicators actually predictive of your target outcomes? If yes, extend the horizon; some results lag by weeks. If not, redesign the leading indicator—change the target audience, the channel, or the intensity. Also examine quality: were efforts deep, focused, and consistent, or shallow and fragmented?

6) How do I run a retrospective solo?

Use a structured prompt like Start/Stop/Continue. Write freely for five minutes in each bucket, then highlight the top one or two items per bucket. Convert each into a “try statement” with a measurable signal (“We’ll know it worked if X happens by Y date”). Review last month’s tries first; carry forward only what proved value.

7) How do I make reviews stick as a habit?

Reduce friction: schedule them at the same time, attach the checklist to your calendar invite, and prepare inputs beforehand. Use a small reward afterward, and track streaks to reinforce consistency. If you miss one, run a shortened “catch-up” version within 24 hours and reset—perfection isn’t required; persistence is.

8) Should I include personal goals in the same review?

Yes, if they compete for the same time and energy budget—which they do. Track them in the same system but on separate lists or dashboards. Allocate time blocks for personal priorities (training, study, family commitments) alongside work so trade-offs are visible. Monthly, check that the mix reflects your values.

9) How do I handle incoming requests that derail the plan midweek?

Route new requests through your prioritization and capacity guardrails. If a request is both urgent and important, reschedule a lower-value block. If it’s urgent but not important, delegate or batch. Preserve at least one major deep-work block daily; protecting that minimum keeps the week productive despite turbulence.

10) What if I feel behind and the review makes me anxious?

Name the feeling, then separate facts from fears. Use the review to choose one meaningful, doable next action per important goal and block time for it in the next 48 hours. Anxiety eases when clarity rises and progress is visible. Keep the session short and concrete; you’re designing a better week, not judging yourself.

Conclusion

A good review ritual is less about dazzling dashboards and more about steady decisions. Weekly reviews keep execution clean: you close loops, align tasks to goals, re-prioritize sanely, and plan realistic blocks. Monthly reviews protect direction: you examine results, run a blameless retro, reallocate resources, and decide which experiments to run next. Together they form a feedback loop that turns intent into outcomes. Start with the time boxes and checklists here, then evolve them to match your context. Above all, commit to cadence—the rhythm teaches you what to change. Block your first review now, attach your checklist, and give your goals the attention they deserve.

Block your next weekly and monthly reviews today, then follow these 12 steps to make them count.

References

- Getting Things Done: The Art of Stress-Free Productivity (Weekly Review concepts), David Allen Company, various editions; overview page accessed 2025 — https://gettingthingsdone.com/

- Objectives and Key Results (OKRs): The Definitive Guide, WhatMatters.com (Measure What Matters), 2023 — https://www.whatmatters.com/getting-started/okrs

- The Power of Small Wins, Teresa M. Amabile & Steven J. Kramer, Harvard Business Review, May 2011 — https://hbr.org/2011/05/the-power-of-small-wins

- Time-Blocking: A Guide to Managing Your Schedule, Cal Newport blog (Study Hacks), 2020 — https://www.calnewport.com/blog/

- Leading vs. Lagging Indicators: What They Are and Why They Matter, Atlassian Work Management, 2023 — https://www.atlassian.com/blog/productivity/leading-vs-lagging-indicators

- The Scrum Guide (Sprint Retrospective), Scrum.org, November 2020 — https://scrumguides.org/scrum-guide.html

- Kanban Guide for Scrum Teams (WIP Limits & Flow), Scrum.org & Kanban Guides, 2020 — https://www.scrum.org/resources/kanban-guide-scrum-teams

- Implementation Intentions: Strong Effects of Simple Plans, Peter M. Gollwitzer, American Psychologist, 1999 — https://doi.org/10.1037/0003-066X.54.7.493

- WOOP: Science & Practice, Gabriele Oettingen / WOOP my life, 2024 — https://woopmylife.org/science

- Eisenhower Matrix: Urgent vs Important, Atlassian Team Playbook, 2022 — https://www.atlassian.com/team-playbook/plays/priority-matrix